Internationalization and Cats: Overview

In this week’s Embedded.fm episode, we discussed user interfaces in embedded systems. That reminded me of the incredible information download I got from Ken Lunde, author of CJKV Information Processing, on Episode 26 about the basics of how internationalization and localization work on embedded devices with displays. Asian languages are more challenging than Latin alphabets so we’ll spend more time on that next week.

Embedded systems are resource constrained, by definition. They function without the huge font packages or easy remote updates associated with websites or PC applications. Instead, an embedded system usually has a fixed set of fonts and characters in large external flash used as read-only memory (ROM). To create the ROM, developers and designers still need to figure out which characters and which fonts to use. The way the characters are represented in the code is also important (you should choose Unicode but I’ll explain in more detail), but not as critical as how the characters are represented in your ROM space. Finally, we need to talk about how internationalization fits in with a project schedule.

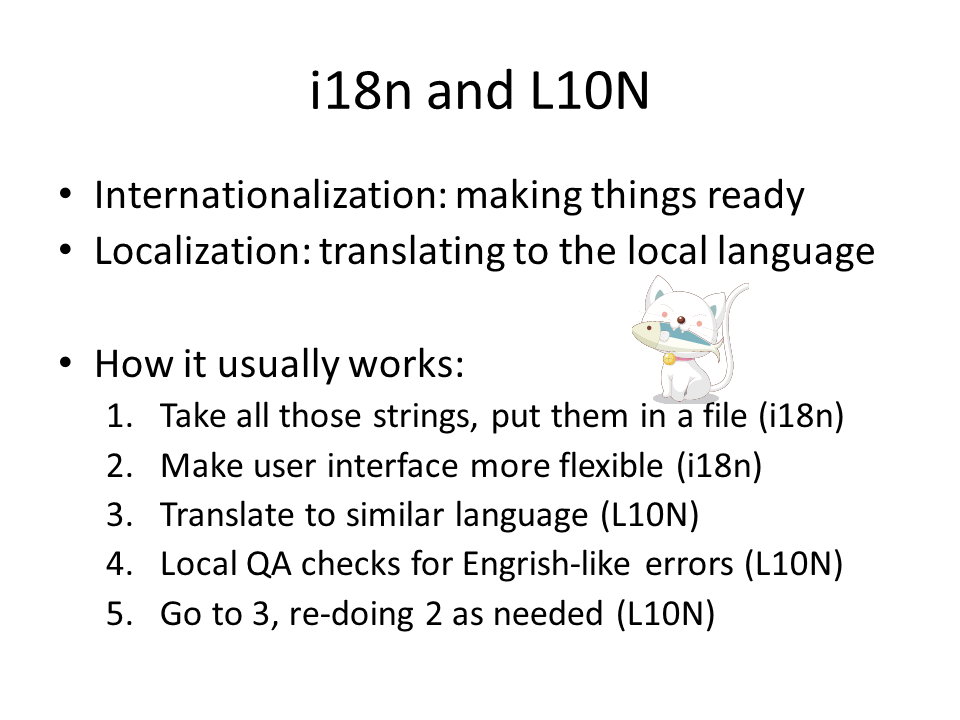

To get started, knowing the common terminology is key to sounding like you know what you are talking about. “Internationalization” has 20 letters and is often abbreviated as i18n (lowercase is intentional: the lowercase “i” is easy to differentiate from the “1” or an “L”). It is the part of translation that involves understanding how the product will be affected; you’ll need to display much longer words, arrange numbers and dates different, possibly write in a different orientation (right-to-left or up-to-down). This is the part that changes major portions of the software.

Localization (12 letters so ten of those are hidden to become L10N, capitalized this time) is the process of translating the product into a particular language. Once your software can display the necessary characters (i18n), whatever your screen puts up to say konnichiwa (hello) is part of L10N.

For most products, the goal is to iterate through i18n and L10N. For example, a US product might be translated to French initially. This requires an expanded character set and putting all of the strings in a file so they can be replaced. While US dates are presented as month/day/year, European French dates are presented as day/month/year. You will probably also need to have a discussion of how to display the diacritical marks (accents) on characters: do you shrink the letter to put a circumflex over it (â)? What about for the capitalized version (Â)? Given a small display, designed for the US market, this will be a design choice: make all of the letters smaller or let the odd ones look funny. Or do you simply remap the characters to their closest proxy and ignore proper French spelling? There are several such surprises awaiting your initial i18n and L10N.

Once French is in the works, perhaps German is next. German is tough as the words are long, really, very long. Can you display supercalifragilisticexpialidocious? It is a long word in English but not in German. Suddenly, your 32 character wide display may not be large enough to hold a single word. Should you put in scrolling or make your fonts smaller and use multiple lines for long words?

Each language has its quirks. For a while, each translation will cause the software to change, to accommodate a difference. As time goes on, the flexibility will be built into the software so that the process becomes easier, until you get to the Asian languages (CJKV: Chinese, Japanese, Korean, Vietnamese).

When translating to a European and North American markets, you may hear the term FIGS which stands for French, Italian, German, Spanish. This block of languages will likely expand your character set from 128 ASCII characters to nearly 200. However, in code, the characters will still fit into a single byte. Strings will still be made up of byte-sized characters and fonts will be lookup tables (just bigger ones) so speed will not be significantly affected (unless you have to move it from internal flash to external but even that is probably not going to impair the usability of the device).

Fridge in Translation

This is all great in theory but let me give an example. Say we are building a consumer refrigerator and want to add an LCD to display messages. Initially, the messages are all fixed: the software might be showing them as bitmaps. When translated to a different language, the software would not be affected, only the bitmaps need to be localized.

More realistically, the software would show dates and times in different locales (locations + languages: Mexican Spanish is different than that in Spain). When going from English to E-FIGS, you’ll need to modify the software to display the dates correctly, to display the correct string for the locale, and to have a font that supports all of the letters.

On the other hand, we know what the LCD will display at all times. If the designer chooses a long word, we can ask for a shorter one. If we don’t want to deal with capital diacritical marks, we can pass that software limitation to the translators. If the fonts take up too much storage, we might optimize out the unused characters (who really needs a semicolon on a fridge? Let’s replace it with a snowflake emoji). Even if we opt to get information from another source (i.e. weather on the web), we have control over the display and can choose to not support certain information if it is not localized in our system.

Now suppose product marketing comes to us with the idea of allowing users to text the fridge so it can act as a family blackboard. Suddenly, we need to support anything the users might text and the software has to have rules for breaking up too-long words. Not only do we need to support all characters in the language (letters and punctuation), do we need to also handle characters outside our fonts and what does the display do when a character is unrecognized?

If we add a keyboard (real or touchscreen) to the fridge to allow users to input a grocery list that can sync with a phone application, the problem again expands (though it is worse for Asian languages, more on that soon). However, that’s beyond my scope today.

Brief Introduction to Unicode

Keeping in mind this amazing IoT fridge idea, how is the fridge going to represent characters internally? In ASCII, “A” is represented in binary as 0100 0001 (or in hex 0x41).

ASCII is a standard for how to put letters into numbers, encoding the characters in the machine. ASCII supports 127 characters: the 94 letters, numbers, and symbols in the US character set as well as 32 control characters (line feed, bell) and NULL (0x00). One of the great things about ASCII is that it is likely something you’ve already seen. A is 0x41, B is 0x42, and so on. It lets a developer build a look-up table to represent the character images (usually bitmaps).

However, once we start internationalizing the device, ASCII is no longer the encoding standard because it cannot represent the additional 94 characters for FIGS (or the several thousand needed for other languages).

Instead, we’ll encode things according to Unicode. The definition of Unicode has changed over the years. It used to be the standards body as well as the actual encoding. That was confusing so now Unicode is the standard that defines the encodings. And we could talk about different encodings (i.e. ShiftJIS for Japanese or UTF16) but there is only one correct encoding: UTF-8.

The fantastic news with UTF-8 is that it looks just like ASCII for the first 127 characters. You can go tell your manager that you have internationalized the strings to use UTF-8’s single-byte encoding right now. Further, most FIGS letters are one byte per character as well; if that first block of localization is to European markets, it won’t require changing how strings are managed in the system, only how we map some additional letters.

However, Cyrillic alphabets and Asian characters need more bytes per character. If it takes 6 bytes to say “hello” in English, it will take more in Russian because their alphabet requires more space. On the other hand, Chinese usually requires only 2 bytes per character which is actually one ideograph which may be a whole word or thought. It is very dense information-wise.

Note: since we can’t really call them characters since that indicates letters in English and single bytes in C, each UTF-8 item is called a code point (the 0x41 code point encodes the letter “A”). I am not very good with that term; please forgive me for introducing it so late and then likely never using it again.

We want to use UTF-8 because that is what most everyone else uses (including your phone, so getting BLE notifications from a phone app means supporting UTF-8). Even better, you probably only need to support one and two byte code points due to the way that Unicode laid out the encoding to have a basic multilingual plane (BMP). Unicode not only took the standards from associated governments, they put the most frequently used code points in one place the BMP. Essentially, they decided to put the most useful stuff in the first two bytes. While all of Chinese can be found in Unicode and accessed via UTF-8, the only ones you need to support are almost certainly in the BMP.

As tempting as it is to talk more about Asian characters, once you know about characters sets and UTF-8 encoding, you can get started with the technical details of i18n. However, before kicking off the project, there are a few more details to consider.

Project Management

Both i18n and L10N are significant investments in terms of cash and resources. I often hear “we need to support all languages from the day we ship!” This is not realistic unless this is a minor revision of an existing, widely localized product.

I have a template schedule for the first i18n and L10N of an embedded device with a small screen (like my fridge from above). Of course, the actual time depends on the complexity of your system. Also note that this focuses on the device software, you may need to do the same for web software and device packaging. As iterations occur, there will be reusable pieces. For example, the font packing tool will need to be written but will likely only require minor updates for new languages. Both i18n and L10N get easier with repetition though the first time can be quite a logistical hurdle. There are companies that help you through the process (such as POEditor) but it still isn’t going to be easy.

Many companies find that the software isn't the most difficult piece; instead identifying local resources becomes a major stopping block. For a polished, built-here look, the product interface needs to be redesigned by a native designer, one who not only speaks the language but is also familiar with the local customs and idioms. They may have font preferences as well as display layout changes necessary for their locale. Next, the product will need translators to localize all of the strings in the system in a way that makes sense in the context of the device. Finally, after the redesigned screens and translated strings are in the firmware, the product needs to go through QA with native speakers to avoid context mistakes.

Next week, I’ll finish up device internationalization with a discussion of Asian languages. Accessing one out of thousands of characters in a reasonable amount of time can be a significant challenge.