Typeypt: A Robot That Types and Teaches

I've been writing about Ty for months now. You've seen him grow from disembodied arm to something more. Well, now I've entered Ty in a contest, the NVIDIA Developer Challenge. You can see (and vote for) my entry; it includes a video and a slide deck.

You will have to click through to see the video, but let me walk you through my entry and slide deck.

Wow, I really know how to start a presentation, right? But there are some rules to this contest. The device had to be based on an NVIDIA Jetson TX1 or TX2. That is a fairly high price to pay to enter a contest. Or it would be, except a friend gave me the TX2.

Next, it was supposed to fit a category: AI Homes (security, home automation), Dr. Robot (smart health), or Till the Earth (smart farming, drone sprayers). There was a catch-all category called Geek Out, where they mentioned service robots, humanoid robots, and voice assistants. Of course, Ty doesn't fit in any of these categories, so I chose the one I could make jokes about.

When I got to judge the Hackaday Prize, I spent a lot of time trying to figure out what in the heck each entry was supposed to do. Thinking about that, I wanted to lay out my goals as simply as possible.

On the other hand, I never have just one goal.

My expectations for the contest aren't really that high. It seems to be quite focused on making "a powerful AI solution built on the NVIDIA Jetson platform." Except, I'm not really doing much with AI.

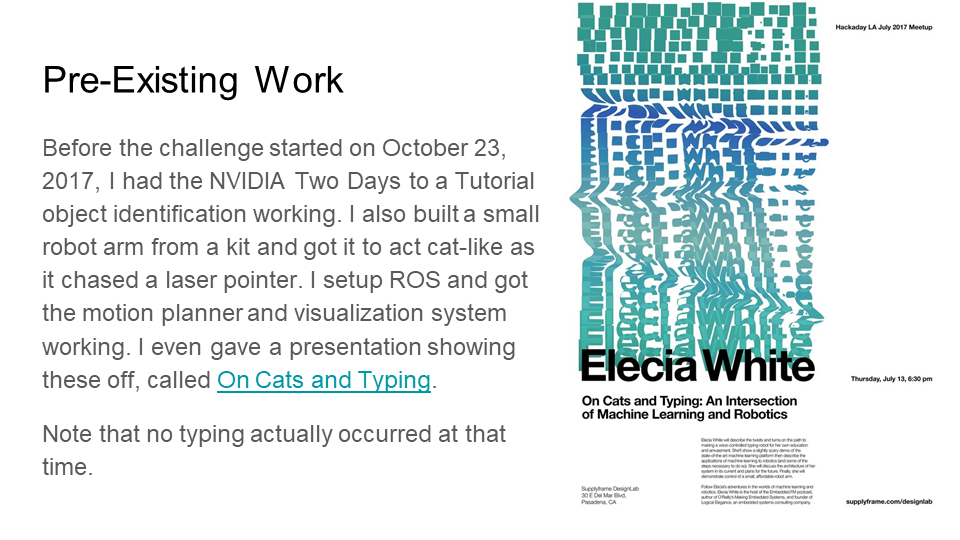

Still, I wanted to enter because it forced me to bundle the code into something mostly working and mostly documented. The contest is a good line in the sand for getting things done. I seem to recall feeling the same way about another event involving my typing robot, which I wrote about in On Cats and Typing.

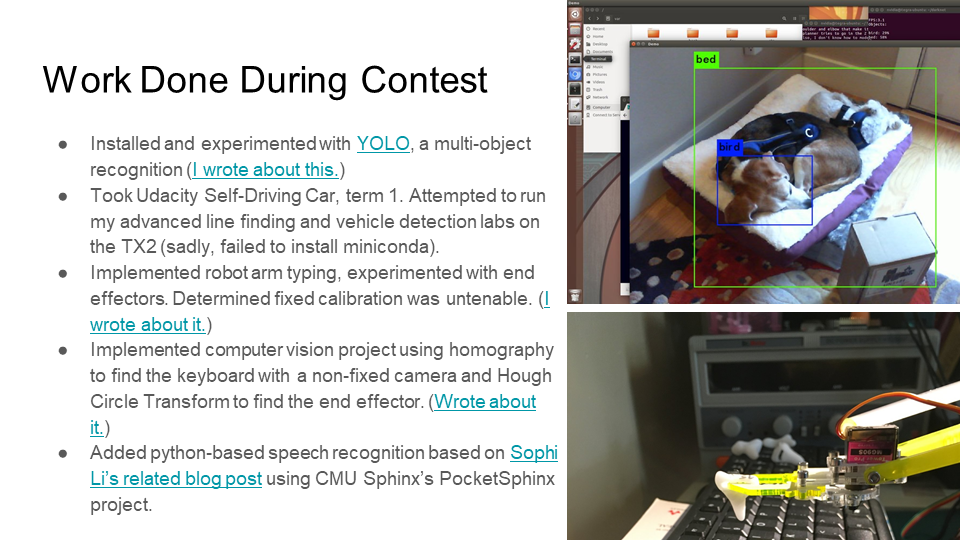

It was a good exercise; I did get some things done on my typing robot. You may have read about when I installed and experimented with YOLO, a multi-object recognition system.

We talked on the podcast about the Udacity Self-Driving Car nanodegree and my experiences with it. I tried to apply it to this contest and attempted to run my advanced lane finding and vehicle detection labs on the TX2. I didn't get far: I sadly failed to install Miniconda because the TX2 is ARM-based.

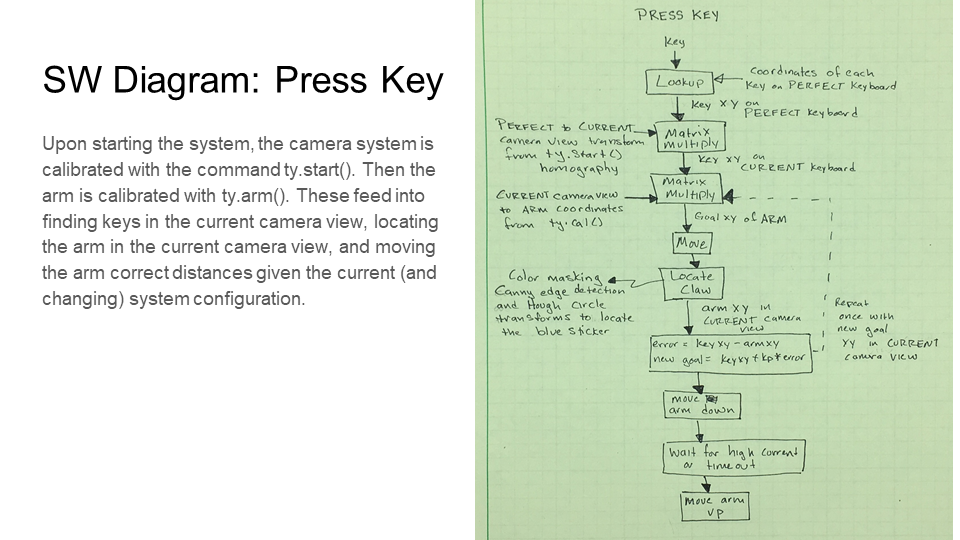

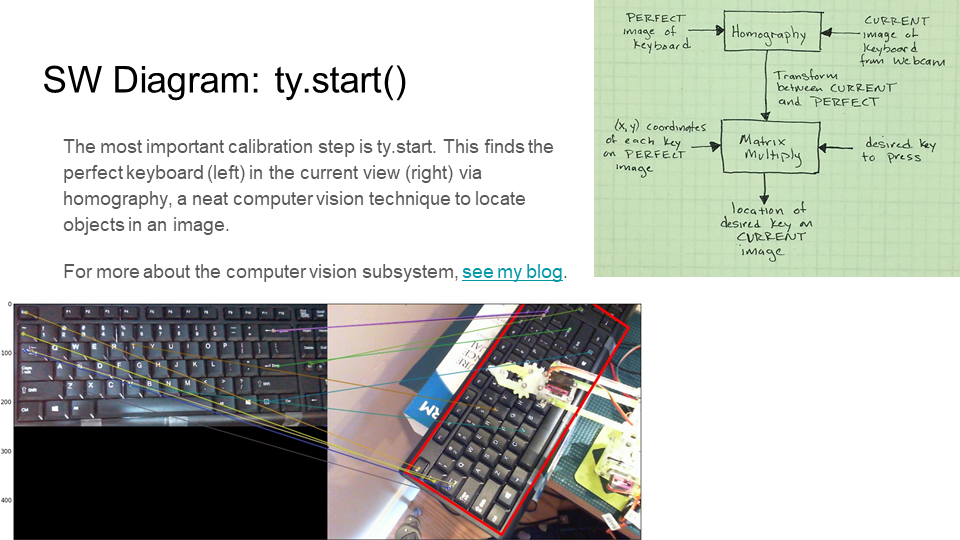

I wrote about implementing robot arm typing, experimenting with end effectors, and determining that fixed calibration was untenable. I also wrote about how I implemented computer vision in the project using homography to find the keyboard with a non-fixed camera and a Hough Circle Transform to find the end effector.
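
To make the two vision steps a little more concrete, here is a from-scratch NumPy sketch of both ideas. It is not the project's code: Ty uses OpenCV, where the closest equivalents are `cv2.findHomography` and `cv2.HoughCircles`. The homography is estimated with the direct linear transform from four or more matched points (for example, keyboard corners in a flat reference layout and where those corners appear in the camera image). The circle finder is a simplified Hough transform that assumes the end effector marker's radius in pixels is already known. The function names, radius, and coordinates are assumptions for illustration.

```python
import numpy as np


def find_homography(src, dst):
    """Estimate the 3x3 homography H such that dst ~ H @ src (direct linear transform).

    src, dst: matching lists of at least four (x, y) points in general position
    (no three collinear).
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the nine entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # H (flattened) is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def apply_homography(h, point):
    """Map one (x, y) point through H and divide out the homogeneous coordinate."""
    x, y, w = h @ np.array([point[0], point[1], 1.0])
    return x / w, y / w


def hough_circle(edges, radius, n_angles=90):
    """Return the (row, col) centre with the most votes for a circle of known radius.

    edges: 2-D boolean array, True where an edge detector fired.
    """
    acc = np.zeros(edges.shape, dtype=int)
    angles = np.linspace(0, 2 * np.pi, n_angles, endpoint=False)
    dr = np.round(radius * np.sin(angles)).astype(int)
    dc = np.round(radius * np.cos(angles)).astype(int)
    for r, c in zip(*np.nonzero(edges)):
        # Every edge pixel votes for all centres that would put it on the circle.
        rr, cc = r - dr, c - dc
        inside = (rr >= 0) & (rr < acc.shape[0]) & (cc >= 0) & (cc < acc.shape[1])
        np.add.at(acc, (rr[inside], cc[inside]), 1)
    row, col = np.unravel_index(np.argmax(acc), acc.shape)
    return int(row), int(col)
```

In this sketch, once `H` maps the reference layout into the camera image, `apply_homography(H, key_center)` (with `key_center` a hypothetical layout coordinate) gives a key's pixel position even after the camera has moved. Note that `hough_circle` returns (row, col), which is (y, x) in image terms. OpenCV's `cv2.HoughCircles` also searches a range of radii and runs its own edge detection on a grayscale image, both of which this sketch skips.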

What you haven't heard is that, in an effort to put in some AI stuff, I added Python-based speech recognition using CMU Sphinx’s PocketSphinx project, following Sophi Li’s related blog post.
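
PocketSphinx hands back its best guess as a text string, so something has to turn that string into keys for the arm. The helper below is a hypothetical sketch of that glue step, not code from the project: `TYPEABLE` and `phrase_to_keys` are invented names, and it assumes the arm can only reach lowercase letters, digits, and the space bar. The recognizer call itself is left out.

```python
import string

# Hypothetical: the keys the arm is assumed to be able to find and press.
TYPEABLE = set(string.ascii_lowercase + string.digits)


def phrase_to_keys(hypothesis):
    """Turn a recognized phrase into a list of key names for the arm to press.

    Letters are lower-cased, words are separated by a single "space" key,
    and any character the arm cannot type is dropped.
    """
    words = ("".join(ch for ch in w if ch in TYPEABLE)
             for w in (hypothesis or "").lower().split())
    keys = []
    for word in words:
        if not word:
            continue  # nothing typeable in this word
        if keys:
            keys.append("space")
        keys.extend(word)
    return keys
```

Returning key names rather than raw characters keeps the speech side separate from the vision side, which only needs to know how to find a key called "h" or "space".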

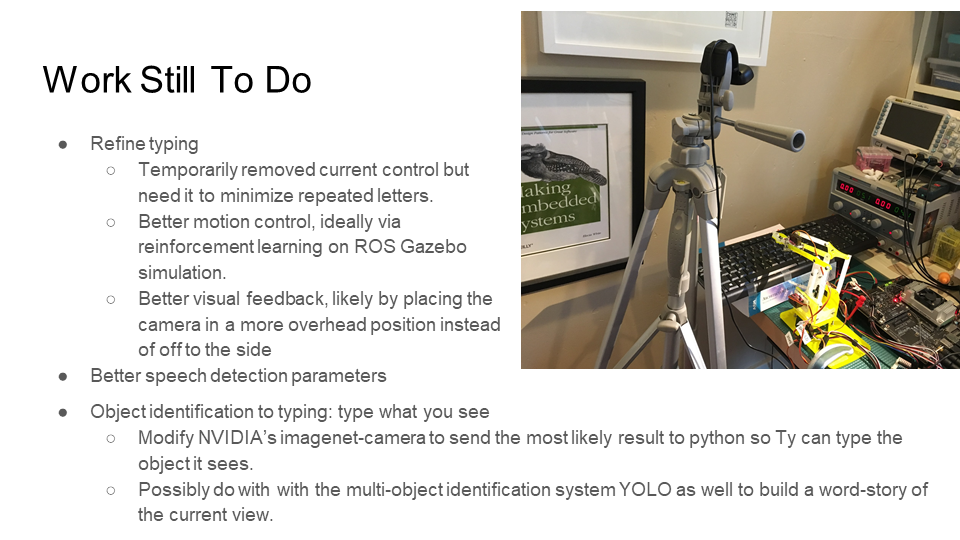

Of course, I didn't finish all I wanted to. Who does? And now I have a plan for the near future to make the system better.

Having laid out the goals of the system and given an overview, I dove into the hardware.

It starts with the Jetson TX2, still on its development board. The TX2 is connected via I2C to a servo controller, which connects to a current monitoring system (an ADC; I wrote about this). That connects to a four-degree-of-freedom (4-DOF) robotic arm. I know the TX2 should rightfully control a state-of-the-art robotic arm intended to do something wildly complicated, like welding repairs in an underwater city or making perfect sourdough bread. However, the MeArm costs $50. And I want to show the advantage of incredible brains over inexpensive brawn.
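
The servo controller in the resources list is a PCA9685, which sets each servo's pulse as a 12-bit count within a fixed PWM period, so commanding an angle comes down to a little arithmetic. This is a minimal sketch, assuming a 50 Hz update rate and servos that sweep 0 to 180 degrees over 500 to 2500 microsecond pulses. Small hobby servos like the MeArm's vary, so treat those defaults (and the function name) as placeholders to be measured.

```python
def angle_to_ticks(angle_deg, freq_hz=50.0, min_us=500.0, max_us=2500.0, max_angle=180.0):
    """Convert a servo angle into a PCA9685 'off' count (0-4095).

    Assumed defaults: 50 Hz PWM and a servo that sweeps 0..max_angle degrees
    as the pulse goes from min_us to max_us microseconds.
    """
    if not 0.0 <= angle_deg <= max_angle:
        raise ValueError(f"angle {angle_deg} is outside 0..{max_angle} degrees")
    # Linear map from angle to pulse width.
    pulse_us = min_us + (max_us - min_us) * angle_deg / max_angle
    # The PCA9685 divides each PWM period into 4096 steps (12-bit resolution).
    period_us = 1_000_000.0 / freq_hz
    return round(pulse_us / period_us * 4096)
```

With these defaults, 0, 90, and 180 degrees come out as 102, 307, and 512 counts, so the whole sweep uses only about 410 of the 4096 counts, roughly 0.44 degrees per count. With the legacy Adafruit_PCA9685 Python library, the result would be passed as the "off" value in `set_pwm(channel, 0, ticks)` after `set_pwm_freq(50)`. The chip's internal oscillator is only nominally 25 MHz, so the real frequency may differ somewhat from the requested one.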

The software is written in Python, using OpenCV (via its cv2 Python module) for computer vision and CMU's PocketSphinx for speech detection. My code is all open source, freely licensed, and on GitHub. The high level is fairly straightforward, but it gets more complex as we dig in.

I really need to write more about this. Getting from identifying a goal key to actually pressing that key, then releasing it, takes a lot of software. And it is a lot of software that needs more attention; it doesn't work as well as I want (I suspect a bug or ten).
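
At its simplest, the path from a goal key to a finished keystroke is locate, approach, press, and release. The sketch below is hypothetical and only shows that sequence: `type_keys`, `locate`, and `move_to` are invented names, the heights are placeholder units, and the vision feedback and error handling that the real code needs are left out.

```python
from typing import Callable, Iterable, Tuple

Point = Tuple[float, float]


def type_keys(keys: Iterable[str],
              locate: Callable[[str], Point],
              move_to: Callable[[Point, float], None],
              hover_z: float = 20.0,
              press_z: float = 0.0) -> None:
    """Press each key in turn: find it, hover above it, push down, lift off.

    locate(key) returns the key's (x, y) in arm coordinates; move_to(point, z)
    is assumed to block until the end effector reaches that position.
    """
    for key in keys:
        target = locate(key)
        move_to(target, hover_z)  # approach from above so we don't drag across other keys
        move_to(target, press_z)  # press the key
        move_to(target, hover_z)  # release it before moving on
```

Passing `locate` and `move_to` in as functions keeps the sequence testable without an arm attached: a fake `move_to` that records its arguments is enough to check the order of moves.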

And yes, my last post, about the computer vision part, was written partly so I could have it done for this contest.

So now we are going to get into the judging criteria. The first time I ran through this, I realized that entering my project was hopeless. I guess I shouldn't tell you that. On the other hand, you can see just how much spin I added.

The first judging criterion was "Use of AI and Deep Learning," and the note for this category was "AI should be a part of your creation!"

Uh-oh. Does computer vision count? When I talked to Dusty Franklin on the Embedded podcast, he seemed to say yes. Also, this category was one reason I was fairly thrilled to get speech detection into the project, even though the detection was fairly random and we had to yell at it (shouting "helicopter!" mostly produced "helicopter" but was occasionally parsed as "I don't know").

I wonder if saying robotics is more fun than AI might be poking a stick at the judges. Ahh, well, entry submitted.

The next category was equally difficult for me to deal with: "Quality of the idea," with the question "How cool and creative is your concept?"

It is a typing robot. You get it, right? And it uses a terrible arm because Shaun Meehan told me to get a robot arm that is completely overpowered or one that is completely underpowered for the task to allow for humor. Guess which one of those options was cheaper.

Sigh, what if they think it is stupid? What if they don't see the (airquote) possibilities? What if they don't laugh?

The next category was "Quality of work," with the question "How well was your idea executed and how well does it leverage NVIDIA Jetson TX2 or Jetson TX1?"

By this point in writing my entry, I had reached the "well, heck, I don't know" stage. I mean, it uses the TX2. I couldn't have done it on a Cortex-M4 without a lot more work, because I used Python, all those vision libraries, and PocketSphinx for speech detection. It uses the TX2, so it counts, right? Right?

The next criterion is "Design of your project," with the question "How visually and aesthetically robust is your project?" So I started to think about what the project really is. I mean, all along Ty has been as much about the writing as it has been about the hardware. My goal here is to learn and share, so maybe I should start pushing that at the judges.

Only a few more judging criteria left. The next one is "Functionality of your project," with the question "How does it perform?"

Giving up on the judges, I decided to simply grade myself. I should have added that I learned about video editing, which wasn't nearly as difficult as I feared (I used iMovie).

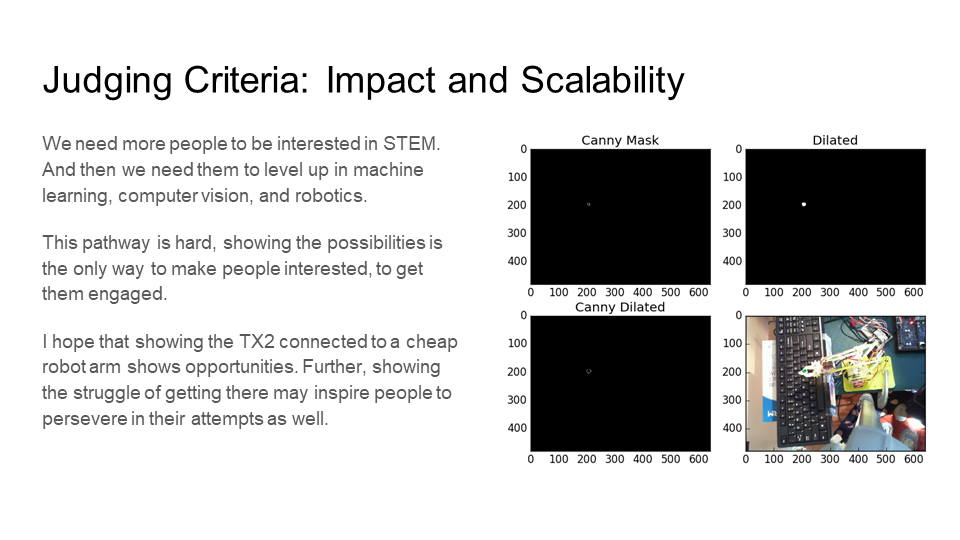

Finally, my nemesis judging criterion: "Impact and scalability," with its impossible question, "Can your solution change the world?"

To answer the question, no. My project will not change the world. That is a very high bar for a contest that runs for four months and only awards $10k as the maximum prize.

I mean, I want to do good stuff and make a difference and all that, but this is my hobby project, worked on in between paying gigs and the rest of life. You are joking about the changing-the-world thing, right?

Yeah, my final slide for that turned out better than my first several drafts. It is almost sappy in its inspirationalness.

I closed the slide deck out with three lists of resources: ones I used, ones I created before the start of the contest, and ones I created during the contest. You don't want pictures of those, but the links might be useful to you.

Resources I used for the contest:

- Two Days to a Demo and the imagenet-camera

- Udacity Self-Driving Car term 1

- TensorFlow

- Keras

- Python OpenCV 3, book and tutorial

- Robot Operating System book

- YOLO: you only look once object identification

- Adafruit PCA9685 PWM Servo and I2C Python libraries

- MeArm library (with some modifications)

- CMU Sphinx

- Sophi Li’s CMU PocketSphinx for Python post

Resources I created before the contest:

- GitHub for Typeypt: test and laser_cat_demo

- My Arms! They Are Here!: Planning my project and exploring TX2’s object identification

- The Sound of One Arm Tapping: Building the MeArm from a flat pack

- A Robot By Any Other Name: Breaking the project into pieces and goals

- Imagine A World of Robots: Exploring Robot Operating System

- On Cats and Typing: A status update on laser following and machine learning

- What If You Had a Machine Do it (related podcast episode)

- Related video of talk at Hackaday DesignLab Meetup

- Completely Lacking Sense: Adding current monitoring to the motor control

Resources I created during the contest:

- GitHub for Typeypt: prototyper

- What’s Up With Ty: Trying to learn enough machine learning concepts to apply them to typing, and adding YOLO to the TX2

- Pressing Buttons: Exploring end effectors and calibration problems due to using a $50 robotic arm

- Video of Ty typing “hello” for the first time

- Hand Waving and OpenCV: Using computer vision to solve calibration problems

- The Good Word About AI: Podcast with NVIDIA’s Dusty Franklin about TX2

- NVIDIA Jetson Developer Challenge Video

So that's my entry. If you like it and don't mind getting emails about contests (or have a burner email address or six), please vote for it on ChallengeRocket.